With so much of the population sheltered at home in response to the COVID-19 pandemic, people are spending more time sharing and watching online videos than ever before. According to a recent Comscore analysis, the number of households that enjoyed video streaming services in March 2020 increased by nearly 50% in comparison with the previous year, while the total number of streaming hours rose by up to 20%.

And this remarkable uptick in streaming activity isn’t just about entertainment providers like Netflix, Amazon, and Hulu. In our quest to maintain a sense of normalcy and continuity during these days of uncertainty, many of us are finding creative ways to stay productive and connected with relatives, friends, and colleagues with the help of video streaming.

Whether it’s online family gatherings, video conferences with remote coworkers or educational instructors, or even live-streamed religious services, the stability, reliability, and quality of online video delivery are now of the utmost importance. As web hosting providers, we all strive to provide the best experience possible for our customers when they share and view video content in these unprecedented times.

In this article, we’ll look at the common problems that can affect the quality of online video and then explore some possible solutions that can help us improve the live streaming experience for our customers.

Common problems with live streaming

Let’s examine the two main issues that users typically experience when streaming video: latency and buffering.

What is latency?

Latency refers to the amount of time it takes for a browser to request a video stream from a server and for the server to process that request and deliver the stream. In short, latency is the time delay we experience when streaming videos. If we lived in an ideal world connected through perfect networks, we would enjoy zero-latency streaming: video content delivered to our browsers with no delay for a truly instantaneous, real-time experience.

Of course, our world of technology is less than perfect, so we will always have a slight lag in video playback even under the best network conditions. The more realistic goal here is to deliver low-latency streams with delays so close to zero that they are virtually imperceptible to viewers.

What causes video latency? Some factors include:

- Physical limitations of network equipment such as transmission towers or fiber optic cables

- The propagation time of data packets

- The number of hops a packet makes from router to router

- Intermediate storage delays as a packet passes through a network switch or bridge
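To make these factors concrete, here is a minimal sketch that adds up propagation time and per-hop delay for a single packet. The function name, the 0.5 ms per-hop delay, and the distances are illustrative assumptions, not measurements; the only physical constant used is that light covers roughly 200 km per millisecond in optical fiber.

```python
# Rough one-way latency model: propagation time plus a fixed delay per hop.
# Every number here is an illustrative assumption, not a measurement.

SPEED_IN_FIBER_KM_PER_MS = 200.0  # light covers roughly 200 km per ms in fiber


def estimate_latency_ms(distance_km: float, hops: int, per_hop_delay_ms: float = 0.5) -> float:
    """Return an estimated one-way delay in milliseconds."""
    propagation_ms = distance_km / SPEED_IN_FIBER_KM_PER_MS
    hop_delay_ms = hops * per_hop_delay_ms  # switching and queuing at each router
    return propagation_ms + hop_delay_ms


far_server = estimate_latency_ms(distance_km=8000, hops=20)
near_server = estimate_latency_ms(distance_km=200, hops=5)
print(f"distant server: {far_server:.1f} ms, nearby server: {near_server:.1f} ms")
```

Even in this simplified model, both distance and hop count contribute directly to the delay, which is why shortening the path matters so much for streaming.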

What is buffering?

Buffering refers to the process of downloading a certain amount of data into the local computer’s memory, or buffer, before playing the video segment consisting of that data.

When it works like it’s supposed to, buffering gives us the experience of smooth, continuous playback. We watch one segment of the streaming video while the next buffering segment loads in the background. By the time we reach the end of the current segment, the next segment has finished loading into the buffer and begins playing without interruption.

Problems can happen, however, when the download rate slows down so much that buffering becomes noticeably ineffective. In this case, we experience frustrating interruptions when the video player pauses at the end of a segment as the next buffering load struggles to catch up with the playback.
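The interplay between download rate and playback can be sketched with a small simulation. This is a simplified model under stated assumptions: segments download one at a time, every segment takes the same time to download, and the player starts as soon as the first segment arrives. The function name and the sample values are hypothetical.

```python
def count_stalls(segment_seconds: float, download_seconds: float, segments: int) -> int:
    """Count how often playback pauses because the next segment is not ready."""
    stalls = 0
    download_done = 0.0  # time at which the segment being fetched has arrived
    player_ready = 0.0   # time at which the player wants the next segment
    for index in range(segments):
        download_done += download_seconds
        if download_done > player_ready:
            # The player has to wait. The initial wait for the very first
            # segment is startup delay, so it is not counted as a stall.
            if index > 0:
                stalls += 1
            player_ready = download_done
        player_ready += segment_seconds  # play the segment through
    return stalls


smooth = count_stalls(segment_seconds=4.0, download_seconds=2.0, segments=5)
choppy = count_stalls(segment_seconds=4.0, download_seconds=6.0, segments=5)
print(f"fast download: {smooth} stalls, slow download: {choppy} stalls")
```

With these assumed numbers, the fast download never stalls, while the slow download pauses before every segment after the first, because each 4-second segment takes 6 seconds to arrive.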

Slow downloads can be attributed to a variety of causes, including reduced network bandwidth or a faulty or unstable internet connection. An underpowered device, for example one with an inadequate graphics processor, can produce similar stutter during playback even when the download keeps up. Buffering issues can also result from traffic bottlenecks, such as when a web hosting provider gets swamped by a surge of requests during, say, a prime time event or even a global pandemic.

CDN: a step in the right direction

A content delivery network (CDN) helps web hosting providers deliver faster streams with reduced video latency and buffering issues. A CDN accomplishes this by doing two main things.

Distributed servers at the network edge

In a traditional web hosting scenario, video files reside on an origin server in the central cloud. When a user requests a video stream, the origin server responds by processing the request and sending the data through the internet to the user’s browser. If this server gets overloaded or if data traffic runs into a network bottleneck, the user will encounter degraded speed and quality in the live stream.

With a CDN, on the other hand, content processing and transmission responsibilities are shared by a group of servers strategically distributed across different locations at the network edge. When a user wants to stream video, the CDN allocates the request to the edge server that’s closest to the user’s geographical location. The shorter and more efficient transport path means fewer hops from router to router and lower latency times for the streaming content.
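As a rough illustration of the "closest edge server" idea, the sketch below picks the edge location with the shortest great-circle distance to the user. The server names and coordinates are hypothetical, and pure geographic distance is a simplifying assumption: real CDNs typically route requests with DNS or anycast and take measured network conditions into account.

```python
import math

# Hypothetical edge locations as (latitude, longitude) in degrees.
EDGE_SERVERS = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "new_york": (40.71, -74.01),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest_edge(user_lat: float, user_lon: float) -> str:
    """Return the name of the edge server geographically closest to the user."""
    return min(
        EDGE_SERVERS,
        key=lambda name: haversine_km(user_lat, user_lon, *EDGE_SERVERS[name]),
    )


print(nearest_edge(48.86, 2.35))    # a user in Paris
print(nearest_edge(35.68, 139.69))  # a user in Tokyo
```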

Content caching

In addition, each edge server in a CDN uses caching to improve the video streaming experience for users.

The first time that a user requests a video stream, the CDN delivers the content and also stores—or caches—it on the nearest edge server. When another user in the region requests that same stream, the edge server can deliver the video content directly from its cache instead of having to forward the request all the way back to the origin server.

Cached delivery helps to accelerate the download process and reduce traffic congestion, which results in faster and smoother buffering.
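The caching flow described above can be sketched in a few lines. The class names are hypothetical and the model is deliberately minimal: a production cache would also handle expiry, eviction, and partial content, none of which are shown here.

```python
class OriginServer:
    """Stands in for the central server that holds the original video files."""

    def __init__(self, videos: dict):
        self.videos = videos
        self.requests_served = 0

    def fetch(self, video_id: str) -> bytes:
        self.requests_served += 1
        return self.videos[video_id]


class EdgeServer:
    """Serves from its local cache and falls back to the origin on a miss."""

    def __init__(self, origin: OriginServer):
        self.origin = origin
        self.cache = {}

    def get(self, video_id: str):
        if video_id in self.cache:
            return self.cache[video_id], "HIT"
        content = self.origin.fetch(video_id)  # round trip to the origin
        self.cache[video_id] = content         # store for later viewers
        return content, "MISS"


origin = OriginServer({"keynote": b"video bytes"})
edge = EdgeServer(origin)
first = edge.get("keynote")   # first viewer in the region
second = edge.get("keynote")  # next viewer, served from the edge cache
print(first[1], second[1], origin.requests_served)
```

The second request never reaches the origin, which is the effect that reduces both load on the origin and delay for the viewer.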

Evaluating a CDN

With ever-increasing network traffic and a growing number of users across the globe, CDN technology certainly represents a good start towards boosting the quality, speed, and reliability of video streaming. Nevertheless, a CDN’s performance can vary based on specific conditions and use cases, and not all providers can guarantee the same level of service.

Choosing a CDN for live streaming is much like choosing a CDN for an overall website. In both cases, it pays to be selective. Does the CDN have the right capabilities? Can it handle the volume of video content and network traffic that we need to serve to our customers?

There are many factors to weigh when evaluating a CDN, but we’ll focus here on two of the most important considerations.

Point of Presence (PoP)

Point of Presence is perhaps the single most important characteristic to look for in a CDN, but what is it exactly?

For the sake of simplicity, we talked earlier about the edge server physically closest to a user, as if a single server handled all the data traffic passing through an edge location. In actuality, there’s a bit more complexity to this picture.

Each edge location consists of not just one but many servers, all housed in a physical data center known as the Point of Presence or PoP. Each server in the PoP handles a portion of the caching and delivery responsibilities for users serviced by that edge location. In short, PoP represents the technology infrastructure needed to make an edge location operational for a CDN.

It’s not hard to see how the capacity of a PoP may dictate how fast our customers can access our video streaming content.

A PoP data center that contains many caching servers equipped with high-capacity storage drives and fast processors can handle more request traffic with less congestion than a smaller PoP that houses only a few lower-capacity machines. A CDN with the larger PoP has more capacity at that particular edge location, although data center capabilities may vary between locations even within the same CDN.

Besides the functional capacity of a PoP, the total number and placement of PoPs matter as well. A CDN with a greater number of PoPs strategically placed in edge locations all around the world can naturally serve more users with lower latency than a smaller CDN with fewer PoPs, where user requests may have to travel long distances to reach a server.

Cloudflare, for example, operates around 200 PoPs across 90 countries, with a greater density of servers placed in key metropolitan centers.

Availability

Availability represents the ability of users to access the resources and services of a CDN. Also known as uptime, availability is typically expressed as the percentage of time that the CDN is operational and accessible. Amazon CloudFront, for example, pledges to maintain 99.9% service availability for its customers.

In essence, the number and duration of data center outages drive the availability percentage. A partial outage, one isolated to one or a few servers, is not uncommon and may impact availability on a smaller scale as the CDN provider moves swiftly to implement a failover solution. A total outage, on the other hand, can have disastrous effects as entire websites go down from lost services and become inaccessible to visitors.
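To put an availability percentage in perspective, it helps to convert it into the downtime it still permits. The helper below is a simple arithmetic sketch; the function name and the 30-day and 365-day periods are assumptions chosen for illustration.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: int) -> float:
    """Return the downtime, in minutes, that a given availability still permits."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


per_month = allowed_downtime_minutes(99.9, period_days=30)
per_year = allowed_downtime_minutes(99.9, period_days=365)
print(f"99.9% allows about {per_month:.1f} min per month, {per_year / 60:.1f} h per year")
```

Under these assumptions, 99.9% availability still leaves room for roughly 43 minutes of downtime in a 30-day month, or a little under 9 hours over a year.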

The massive Cloudflare outage of July 2, 2019, for example, presented an eye-opening lesson for the internet community. For about 30 minutes, a CPU spike across Cloudflare’s network, which the company later traced to a faulty firewall rule deployment, disrupted its services. The disruption affected websites globally and raised awareness about the risks of relying on a single CDN provider for network resources.

If even a giant CDN provider like Cloudflare can go down without warning, where can we turn for assured availability and failover solutions?

Up a notch: Multi CDN

A Multi CDN solution leverages the power of several CDNs working in combination to accelerate video delivery globally and offer a failover system when something goes awry at the network edge. You can learn more about Multi CDN in another article: Multi CDN: what is it and why businesses are adopting it.

Having a choice of service providers in a Multi CDN means that we can switch to the best-performing CDN for a specific locality when we need to optimize live streaming for, say, customers stuck at home under regional lockdown orders. And in the event of a CDN malfunction or outage, we can swiftly fall back on an alternative provider that has the immediate uptime to keep our websites operational.

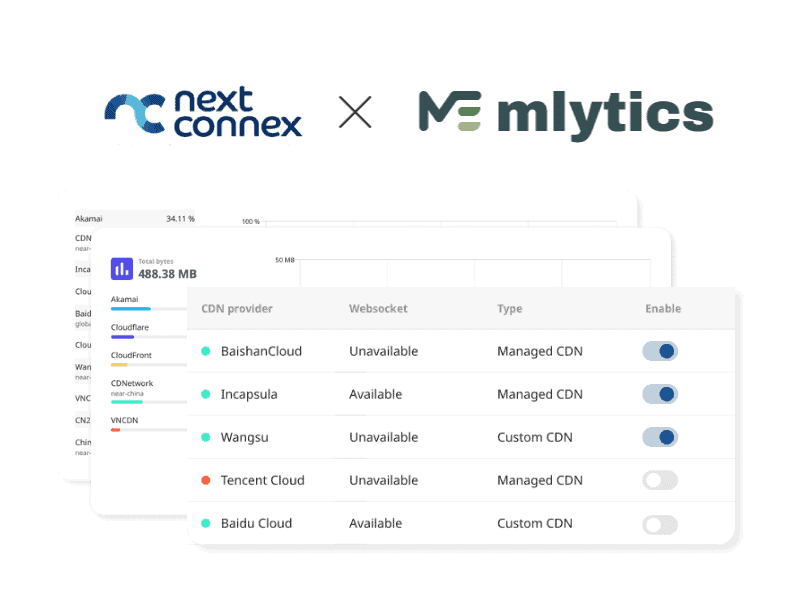
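The switching and fallback behavior described above can be sketched as a small selection function. This is a hypothetical illustration, not how any particular Multi CDN product works: the provider names, the `CdnStatus` fields, and the rule of choosing the healthy provider with the lowest measured latency are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class CdnStatus:
    name: str          # hypothetical provider label
    healthy: bool      # result of a recent health check
    latency_ms: float  # recent latency measured for the viewer's region


def pick_cdn(statuses: list) -> str:
    """Pick the healthy CDN with the lowest latency, skipping any that are down."""
    healthy = [status for status in statuses if status.healthy]
    if not healthy:
        raise RuntimeError("no healthy CDN available")
    return min(healthy, key=lambda status: status.latency_ms).name


normal_day = [
    CdnStatus("cdn_a", healthy=True, latency_ms=18.0),
    CdnStatus("cdn_b", healthy=True, latency_ms=35.0),
]
during_outage = [
    CdnStatus("cdn_a", healthy=False, latency_ms=18.0),
    CdnStatus("cdn_b", healthy=True, latency_ms=35.0),
]
print(pick_cdn(normal_day))     # fastest provider wins
print(pick_cdn(during_outage))  # traffic falls back to the remaining provider
```

In practice, the health and latency inputs would come from continuous monitoring, and the decision would usually be applied at the DNS or load-balancing layer rather than in application code.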

There are different ways to implement a Multi CDN, and all require some degree of customization and manual configuration. Fortunately, Mlytics has spent years perfecting a robust CDN solution for web hosting and media delivery providers who need it the most.

With a turnkey solution engineered and packaged by the experts at Mlytics, Multi CDN implementation has never been simpler. Visit the Mlytics page today to learn more about how a Multi CDN can help turn unwanted video latency into a thing of the past.